Unless you are a time traveler who just landed in 2018, the app you are working on almost certainly uses some REST API (we’ll skip GraphQL for now) to CRUD the data it works with.

My preferred technology for implementing such an API is Asp.Net Core WebAPI, where creating endpoints is a fairly trivial task.

Protecting the endpoints from unauthorized access is, unfortunately, a bit less trivial, but it is still easy in Asp.Net Core compared to how it was done in the past.

In Papiri we use claims-based authentication with JWT bearer tokens.

What is JWT?

JSON Web Tokens are an open, industry-standard (RFC 7519) method for representing claims securely between two parties. JWT is a de facto standard on the web for performing authorization, and it does not depend on any technology or platform. If you check out https://jwt.io/ you can see libraries in many different languages.

Basically, a JWT token is a string made of 3 base64url-encoded parts separated by dots, like this one:

eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiIxMjM0NTY3ODkwIiwibmFtZSI6IkpvaG4gRG9lIiwiaWF0IjoxNTE2MjM5MDIyfQ.XbPfbIHMI6arZ3Y922BhjWgQzWXcXNrz0ogtVhfEd2o

You can take this string and paste it on jwt.io to see what it contains…

The first part of that string is called the header and it contains things like the signing algorithm and the token type.

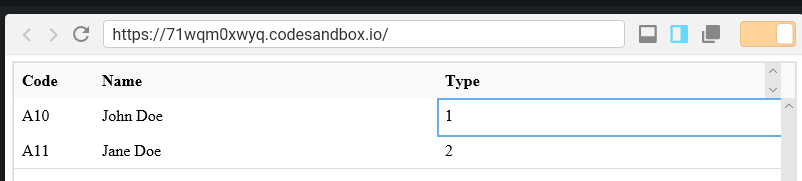

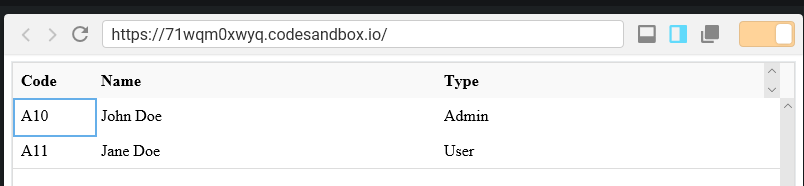

The second part of the string (the payload) contains the set of claims we want to send as part of the JWT token. These are the claims that become part of the principal's claims once authorization is complete on the API side. Keep in mind that this part is only encoded, not encrypted, so anyone holding the token can read it.
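To see that the first two parts are readable without any key, here is a minimal sketch that decodes them using only the base class library. The `JwtPeek` class and `DecodeSegment` helper are names made up for this illustration; they are not part of any JWT library.

```csharp
using System;
using System.Text;

public static class JwtPeek
{
    // Decodes one base64url segment into a UTF-8 string.
    // (Hypothetical helper, written only for this illustration.)
    public static string DecodeSegment(string segment)
    {
        // base64url uses '-' and '_' instead of '+' and '/', and drops '=' padding.
        string s = segment.Replace('-', '+').Replace('_', '/');
        switch (s.Length % 4)
        {
            case 2: s += "=="; break;
            case 3: s += "="; break;
        }
        return Encoding.UTF8.GetString(Convert.FromBase64String(s));
    }

    public static void Main()
    {
        // The sample token from this post, split over three lines for readability.
        string token =
            "eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9." +
            "eyJzdWIiOiIxMjM0NTY3ODkwIiwibmFtZSI6IkpvaG4gRG9lIiwiaWF0IjoxNTE2MjM5MDIyfQ." +
            "XbPfbIHMI6arZ3Y922BhjWgQzWXcXNrz0ogtVhfEd2o";

        string[] parts = token.Split('.');
        Console.WriteLine(DecodeSegment(parts[0])); // {"alg":"HS256","typ":"JWT"}
        Console.WriteLine(DecodeSegment(parts[1])); // {"sub":"1234567890","name":"John Doe","iat":1516239022}
    }
}
```

This is the same thing jwt.io does in the browser: no secret is involved in reading the header or the claims.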

The third part is the signature, computed over the previous two sections with the secret key (with HS256 it is an HMAC, a keyed hash, not encryption). Its purpose is to let the backend verify that the claims have not been tampered with; if they have, the signature no longer matches and the token is invalid.
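For the HS256 algorithm, the signature check can be sketched with nothing but `HMACSHA256` from the base class library. This is only an illustration of the idea, not what the JWT library does internally line by line; the `Hs256Sketch` class and the secret value are invented for the example.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class Hs256Sketch
{
    // Encodes bytes as base64url: '+' -> '-', '/' -> '_', padding removed.
    public static string Base64Url(byte[] bytes) =>
        Convert.ToBase64String(bytes).TrimEnd('=').Replace('+', '-').Replace('/', '_');

    // Computes the third token part for HS256:
    // HMAC-SHA256 over "header.payload", keyed with the shared secret.
    public static string Sign(string headerDotPayload, string secret)
    {
        using (var hmac = new HMACSHA256(Encoding.UTF8.GetBytes(secret)))
        {
            return Base64Url(hmac.ComputeHash(Encoding.ASCII.GetBytes(headerDotPayload)));
        }
    }

    // Recomputes the signature and compares it with the one carried by the token.
    public static bool Verify(string token, string secret)
    {
        string[] parts = token.Split('.');
        if (parts.Length != 3) return false;

        byte[] expected = Encoding.ASCII.GetBytes(Sign(parts[0] + "." + parts[1], secret));
        byte[] actual = Encoding.ASCII.GetBytes(parts[2]);
        return CryptographicOperations.FixedTimeEquals(expected, actual);
    }

    public static void Main()
    {
        const string secret = "demo-secret-for-illustration-only"; // hypothetical value
        string unsigned = "eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiIxMjM0NTY3ODkwIn0";
        string token = unsigned + "." + Sign(unsigned, secret);

        // Change one character of the payload to simulate tampering.
        string tampered = token.Replace("eyJzdWIi", "eyJzdWIj");

        Console.WriteLine(Verify(token, secret));         // True
        Console.WriteLine(Verify(tampered, secret));      // False
        Console.WriteLine(Verify(token, "wrong-secret")); // False
    }
}
```

Anyone can read or edit the first two parts, but without the secret they cannot produce a matching third part.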

How to create a JWT token?

First of all, you need an app secret key which you will use to sign the JWT token. You DO NOT put it in your git repo, config files, etc., because anyone who gets hold of it would be able to generate a JWT token with any set of claims, impersonating different roles and so on.

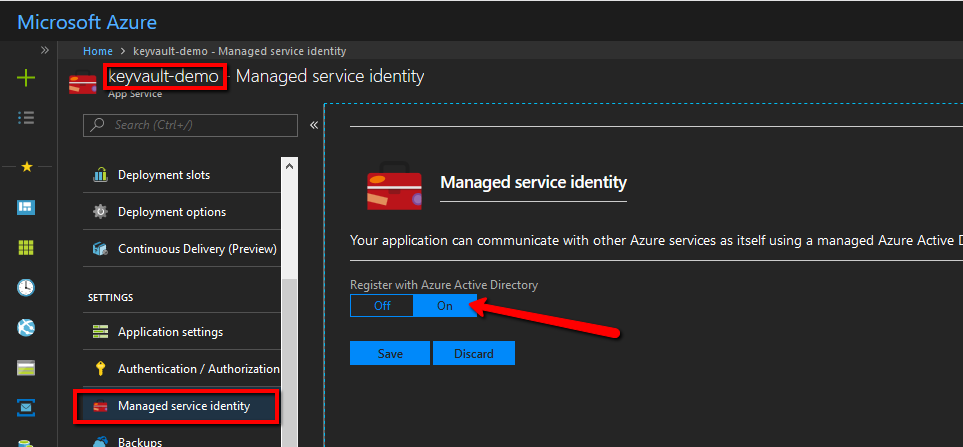

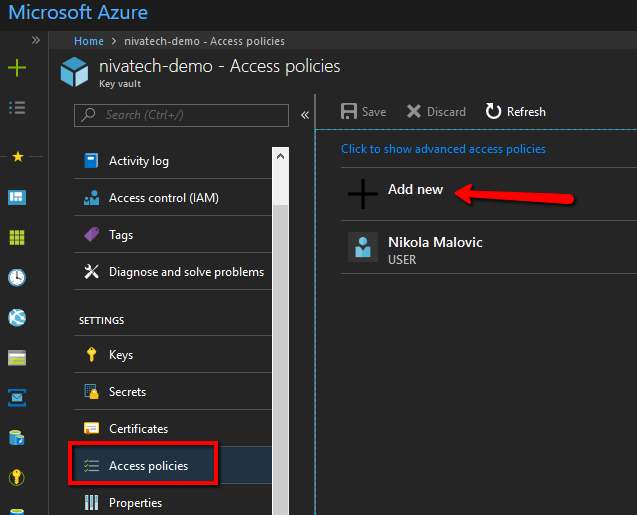

(You should store the app secret in Azure Key Vault, but how to do that is a topic for a future blog post.)

    // Turn the secret string into a symmetric key and pick HMAC-SHA256 for signing.
    var key = new SymmetricSecurityKey(Encoding.UTF8.GetBytes(secret));
    var creds = new SigningCredentials(key, SecurityAlgorithms.HmacSha256);

Once we have transformed the secret into a credentials object, we need to define the set of claims which will be sent in the JWT token. Here are the claims we use in the Papiri app:

    var claims = new List<Claim>()
    {
        new Claim(JwtRegisteredClaimNames.Sub, user.Id.ToString()),
        new Claim(JwtRegisteredClaimNames.UniqueName, user.Email),
        new Claim(JwtRegisteredClaimNames.GivenName, user.FirstName),
        new Claim(JwtRegisteredClaimNames.FamilyName, user.FamilyName),
        new Claim(JwtRegisteredClaimNames.Jti, KeyGenerator.GetSecureGuid().ToString()),
        new Claim(JwtRegisteredClaimNames.Iss, issuer),
        // iat is a NumericDate: seconds (not milliseconds) since the Unix epoch
        new Claim(JwtRegisteredClaimNames.Iat, DateTimeOffset.UtcNow.ToUnixTimeSeconds().ToString(), ClaimValueTypes.Integer64),
        new Claim(JwtRegisteredClaimNames.Email, user.Email)
    };

A few thoughts about the claims:

- We set the jti claim to a fresh random value on every token generation. It is the token's unique identifier, so no two tokens are identical even for the same user, and the API can use it to detect a token that is being replayed.

- The iat claim records when the token was issued. Combined with an expiration policy which invalidates the token after x seconds, for example, it reduces the risk of someone stealing the token and using it to issue their own calls.

- As you can tell, we also send some data describing the user in more detail, which simplifies our microservice architecture and avoids cross-service authorization calls.
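To make the iat and expiration idea concrete, here is a small sketch of the arithmetic, assuming timestamps are expressed as whole seconds since the Unix epoch (the NumericDate format RFC 7519 uses). The `TokenLifetime` class and its methods are hypothetical names for this example; in the real app the JWT middleware performs the lifetime check for you.

```csharp
using System;

public static class TokenLifetime
{
    // NumericDate: whole seconds since 1970-01-01T00:00:00Z.
    public static long ToNumericDate(DateTimeOffset instant) => instant.ToUnixTimeSeconds();

    // True when a token issued at `iat` has reached or passed `maxAge` at time `now`.
    public static bool IsExpired(long iat, TimeSpan maxAge, DateTimeOffset now) =>
        ToNumericDate(now) >= iat + (long)maxAge.TotalSeconds;

    public static void Main()
    {
        long iat = 1516239022; // the iat value from the sample token in this post
        DateTimeOffset issued = DateTimeOffset.FromUnixTimeSeconds(iat);
        TimeSpan maxAge = TimeSpan.FromSeconds(60);

        Console.WriteLine(IsExpired(iat, maxAge, issued.AddSeconds(30))); // False
        Console.WriteLine(IsExpired(iat, maxAge, issued.AddSeconds(61))); // True
    }
}
```

The shorter the allowed age, the smaller the window in which a stolen token is useful, at the price of issuing fresh tokens more often.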

All right, credentials and claims are ready, so let’s define the token expiration and create the token!

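    // Token lifetime: valid for one minute from now (UTC).
    var expires = DateTime.UtcNow.AddMinutes(1);

    var jwtSecurityToken = new JwtSecurityToken(
        expires: expires,
        claims: claims,
        signingCredentials: creds);

    // Serialize to the compact header.payload.signature form.
    string token = new JwtSecurityTokenHandler().WriteToken(jwtSecurityToken);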

And there we are – we got the signed JWT token containing our claims.

How to send a JWT token with API requests?

The best approach is to send it in the Authorization request header in the form of a Bearer token, something like this:

    Authorization: Bearer JWT_TOKEN_GOES_HERE

In C# this is usually done like this:

    using (var client = new HttpClient())
    {
        client.DefaultRequestHeaders.Authorization = new AuthenticationHeaderValue("Bearer", token);
        ...
    }
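If different calls need different tokens, one alternative is to set the header on each request instead of on the client's defaults. The sketch below shows that variant and prints the header value that would go on the wire; the `BearerRequest` class and the example.com URL are assumptions made for the illustration.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

public static class BearerRequest
{
    // Builds a GET request that carries the token in the Authorization header.
    public static HttpRequestMessage Create(string url, string token)
    {
        var request = new HttpRequestMessage(HttpMethod.Get, url);
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", token);
        return request;
    }

    public static void Main()
    {
        // Hypothetical endpoint and placeholder token, for illustration only.
        using (var request = Create("https://api.example.com/documents", "JWT_TOKEN_GOES_HERE"))
        {
            Console.WriteLine(request.Headers.Authorization); // Bearer JWT_TOKEN_GOES_HERE
        }
    }
}
```

The request built this way can then be passed to `HttpClient.SendAsync` on a shared client instance.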

How to authorize API calls with the JWT bearer authentication header?

So now there is a call hitting your endpoint with the bearer token. How do you use it in your endpoint? In Asp.Net Core that is super easy!

In your Asp.Net Core Startup.cs class, find the ConfigureServices method and add this code there:

    services.AddAuthentication(JwtBearerDefaults.AuthenticationScheme)
        .AddJwtBearer(cfg =>
        {
            cfg.TokenValidationParameters = new TokenValidationParameters()
            {
                ValidateAudience = false,
                ValidateIssuer = true,
                ValidateLifetime = true,
                ValidateIssuerSigningKey = true,
                ValidIssuer = issuer,
                IssuerSigningKey = new SymmetricSecurityKey(Encoding.UTF8.GetBytes(APP_SECRET_HERE))
            };
        });

One more time: do not put the app secret in your code – this is just a demoware sample – wait until I describe how to store it properly in Azure Key Vault in the next blog post 🙂

One more line needs to be added to the Configure method, before the MVC middleware is registered so that authentication runs first:

    app.UseAuthentication();

Now that we have this set up, all we need to do is put the [Authorize] attribute on the controller we want to protect and that’s it!

If the token is valid, its claims are copied into the claims of the User property the controller exposes inside the action, and you are good to go!
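Reading a claim back from the principal can be sketched like this. The example builds a `ClaimsPrincipal` by hand as a stand-in for the controller's User property, and the `ClaimsReader` helper is a hypothetical name. One thing to be aware of: depending on version and configuration, the JWT handler may map short claim names such as sub to the longer `ClaimTypes` URIs, so the helper checks both.

```csharp
using System;
using System.Security.Claims;

public static class ClaimsReader
{
    // Returns the user id from the principal, or null when it is missing.
    // Checks the raw "sub" name first, then the mapped NameIdentifier type.
    public static string GetUserId(ClaimsPrincipal user) =>
        user.FindFirst("sub")?.Value ?? user.FindFirst(ClaimTypes.NameIdentifier)?.Value;

    public static void Main()
    {
        // Stand-in for the principal the middleware builds from a valid token.
        var identity = new ClaimsIdentity(new[]
        {
            new Claim(ClaimTypes.NameIdentifier, "42"),
            new Claim(ClaimTypes.Email, "jane@example.com")
        }, authenticationType: "Bearer");
        var user = new ClaimsPrincipal(identity);

        Console.WriteLine(user.Identity.IsAuthenticated); // True
        Console.WriteLine(GetUserId(user));               // 42
    }
}
```

Inside a controller action the same helper would be called as `ClaimsReader.GetUserId(User)`.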

There you go – crash course in JWT tokens in Asp.Net Core!